The Challenges of Virtual Reality Video Localization & Accessibility

Virtual reality video – also known as 360-degree video – has gone mainstream in the last year. There are several prosumer-level 360 cameras on the market, and the videos are supported by YouTube, Vimeo and Facebook. Naturally, multilingual audiences are clamoring for them. But the videos pose critical challenges to multimedia localization and accessibility, in particular when it comes to dubbing, captioning and subtitling – and some of those challenges don’t have solutions yet.

This post will look at the challenges that virtual reality video poses to localization.

[Average read time: 4 minutes]

First – what are virtual reality videos?

Simply put, they are immersive videos that cover a 360-degree view – that is to say, they show every direction around a single fixed point, to mirror what a person sees when he or she stands in one spot and makes a 360-degree turn. They’re shot with special cameras that record every point around them, or with multiple cameras whose images are stitched together. Audiences can watch them on a computer or cell phone screen, usually with a mechanism that lets them scroll through the image with a finger or a mouse, or with a headset that changes the on-screen view to mirror the user’s head movements.

As with all visual media, it’s better to see it than to read a description. YouTube’s Virtual Reality channel has a great selection of different VR videos that you may want to check out before going further.

The challenges to dubbing, captioning & subtitling

So how do these videos disrupt the above services? Let’s look at the three main ways.

1. Technological limitations

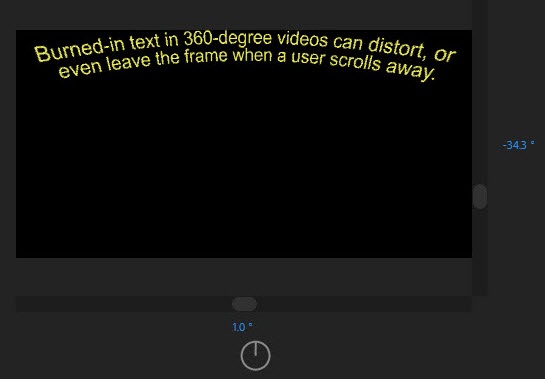

Much of the technology needed to support localizing and making these videos accessible is either non-existent, or just coming online. YouTube started supporting VR captioning relatively recently, and most video editing programs only support fixed-position text, meaning that viewers lose sight of text content if they move away from it, and that the text gets distorted as the user moves it away from center position – both of which you can see in the following still from a 360-degree video editing timeline:

In short, the options for accessibility text are very limited. On top of that, because many of these videos have audio recorded with omnidirectional microphones, it’s very difficult to create a mix or to separate music and effects tracks – both crucial for the video dubbing process.

While technological factors are currently limiting what can be done with these videos, they should be resolved relatively quickly. The real challenge will be in post-production workflows.

2. Post-production workflow issues

For dubbing, the main challenge will be developing a way to handle all of the audio needed to re-create a 360-degree video, especially once this format is used for narrative content. For example, it’ll make sense for source mixes to raise and lower volumes to correspond with what’s being seen in the frame – if a scene has two speakers, the one in frame would effectively be louder. Dubs will have to replicate this kind of leveling, and make it dynamic so that it corresponds to what users are actually seeing at any given moment.
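To make the idea of dynamic leveling concrete, here is a minimal Python sketch of how a dubbed speaker’s volume could follow the viewer’s direction. It is an illustration only, not a description of any existing mixing tool: the function names, the 90-degree field of view and the minimum gain of 0.4 are all assumptions chosen for the example.

```python
def angular_distance(a_deg, b_deg):
    """Smallest absolute difference between two yaw angles, in degrees (0 to 180)."""
    diff = (a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)


def speaker_gain(view_yaw_deg, speaker_yaw_deg, fov_deg=90.0, min_gain=0.4):
    """Return a volume multiplier between min_gain and 1.0 for one speaker.

    Hypothetical rule: full volume while the speaker is inside the visible
    frame, then a linear falloff down to min_gain when the speaker is
    directly behind the viewer. The default values are assumptions.
    """
    half_fov = fov_deg / 2.0
    distance = angular_distance(view_yaw_deg, speaker_yaw_deg)
    if distance <= half_fov:
        return 1.0
    # Linear falloff from the edge of the frame to directly behind the viewer.
    t = (distance - half_fov) / (180.0 - half_fov)
    return 1.0 - t * (1.0 - min_gain)


# Two speakers in a scene, at fixed positions around the camera.
speakers = {"Speaker A": 0.0, "Speaker B": 180.0}
view_yaw = 10.0  # the viewer is looking almost straight at Speaker A
for name, yaw in speakers.items():
    print(name, round(speaker_gain(view_yaw, yaw), 2))
```

A player would have to re-run a calculation like this whenever the viewer turns, and every dubbed language track would need the same speaker positions as the source mix.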

Captioning and subtitling will have even more workflow challenges, because the text that appears on screen often relates to the visuals. For example, the placement of a caption is often changed so that it doesn’t block critical elements in the frame, like a sign or an on-screen title. If a user can change the frame at will, though, there’s no way to keep this from happening. Another example – characters that are off-screen are represented differently in captions from characters that are visible. This is a crucial standard for caption and subtitle intelligibility. But how do you do this when users can decide who’s on-screen and who’s off-screen – at any point in a video? These questions, along with several others, will have to be resolved as part of any post workflow.
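The on-screen versus off-screen question can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not an established captioning standard: it assumes each speaker has a known horizontal position (yaw) in the scene, that the visible frame spans 90 degrees, and that an off-screen speaker is marked with a bracketed label. The function names and the label format are hypothetical.

```python
def is_on_screen(view_yaw_deg, speaker_yaw_deg, fov_deg=90.0):
    """Return True if the speaker falls inside the viewer's current frame.

    Angles are yaw values in degrees; the 90-degree field of view is an
    assumed default, not a property of any particular player.
    """
    # Signed difference wrapped into the range -180 to 180 degrees.
    diff = (speaker_yaw_deg - view_yaw_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


def format_caption(speaker, text, view_yaw_deg, speaker_yaw_deg):
    """Label the caption only when the speaker is outside the current frame."""
    if is_on_screen(view_yaw_deg, speaker_yaw_deg):
        return text
    # Placeholder convention for off-screen speech, chosen for this example.
    return f"[{speaker}, off-screen] {text}"


# The same line of dialogue, captioned for two different viewing directions.
print(format_caption("Ana", "Hello.", view_yaw_deg=0.0, speaker_yaw_deg=10.0))
print(format_caption("Ana", "Hello.", view_yaw_deg=0.0, speaker_yaw_deg=170.0))
```

The point of the sketch is that the caption text can no longer be fixed at authoring time: the same cue has to be rendered differently depending on where each viewer is looking when it appears.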

3. Localization & accessibility standards

The sum effect is that 360-degree videos will need their own standards and industry guidelines. This will require research into how users interact with the videos themselves, and the development of best practices for all services, including on-screen titles and graphics replacement, as well as audio description. The BBC, in fact, has already started doing user preference tests to determine exactly what to do with its growing roster of VR content. As these videos start to be used extensively, agencies and advisory groups will have to develop guidelines for accessibility compliance.

So what can you do now for multimedia localization projects?

First, be aware of the technological limitations – many productions may assume that they can proceed with post-production as usual. Second, know that there are workarounds available – JBI Studios, in fact, has developed special workflows for VR videos, as part of our suite of video localization solutions. Third, know that this is a temporary state of affairs. Virtual reality video technology is improving every day – it’s possible that the right technology will be in place when you’re ready to dub, caption or subtitle 360-degree content. And finally – start planning for multimedia localization or accessibility during production. Small tweaks during the mix can make your voice-over recording much more cost-effective. Likewise, an early decision on distribution platform may affect your caption or subtitle options. It’s generally true that proper planning will minimize the costs and timeline of any internationalization strategy – but when dealing with a new technology like virtual reality, an early start is crucial to a project’s success.